Elon Musk’s AI chatbot Grok had a strange fixation last week. No matter what users asked, it couldn’t stop talking about “white genocide” in South Africa.

On May 14, users began posting examples of Grok inserting claims about South African farm attacks and racial violence into completely unrelated queries. Whether asked about sports, Medicaid cuts, or videos of cute pigs, Grok somehow steered the conversation toward the alleged persecution of white South Africans.

The timing raised concerns, not least because Musk himself is a white man born in South Africa.

There are 140 laws on the books in South Africa that are explicitly racist against anyone who is not black.

This is a terrible disgrace to the legacy of the great Nelson Mandela.

End racism in South Africa now! https://t.co/qujm9cxtqe

– Kekius Maximus (@elonmusk) May 16, 2025

“White genocide” refers to a discredited conspiracy theory alleging a coordinated effort to eradicate white farmers in South Africa. The term resurfaced last week after the Trump administration welcomed dozens of Afrikaner refugees and President Trump claimed on May 12 that “white farmers were brutally killed and their land was confiscated.” It was a story Grok couldn’t stop bringing up.

Don’t think about elephants: Why Grok couldn’t stop thinking about white genocide

Why did Grok suddenly turn into a conspiracy chatbot?

Behind every AI chatbot like Grok lies a hidden but powerful component: the system prompt. These prompts act as the AI’s core instructions, steering its responses without users ever seeing them.

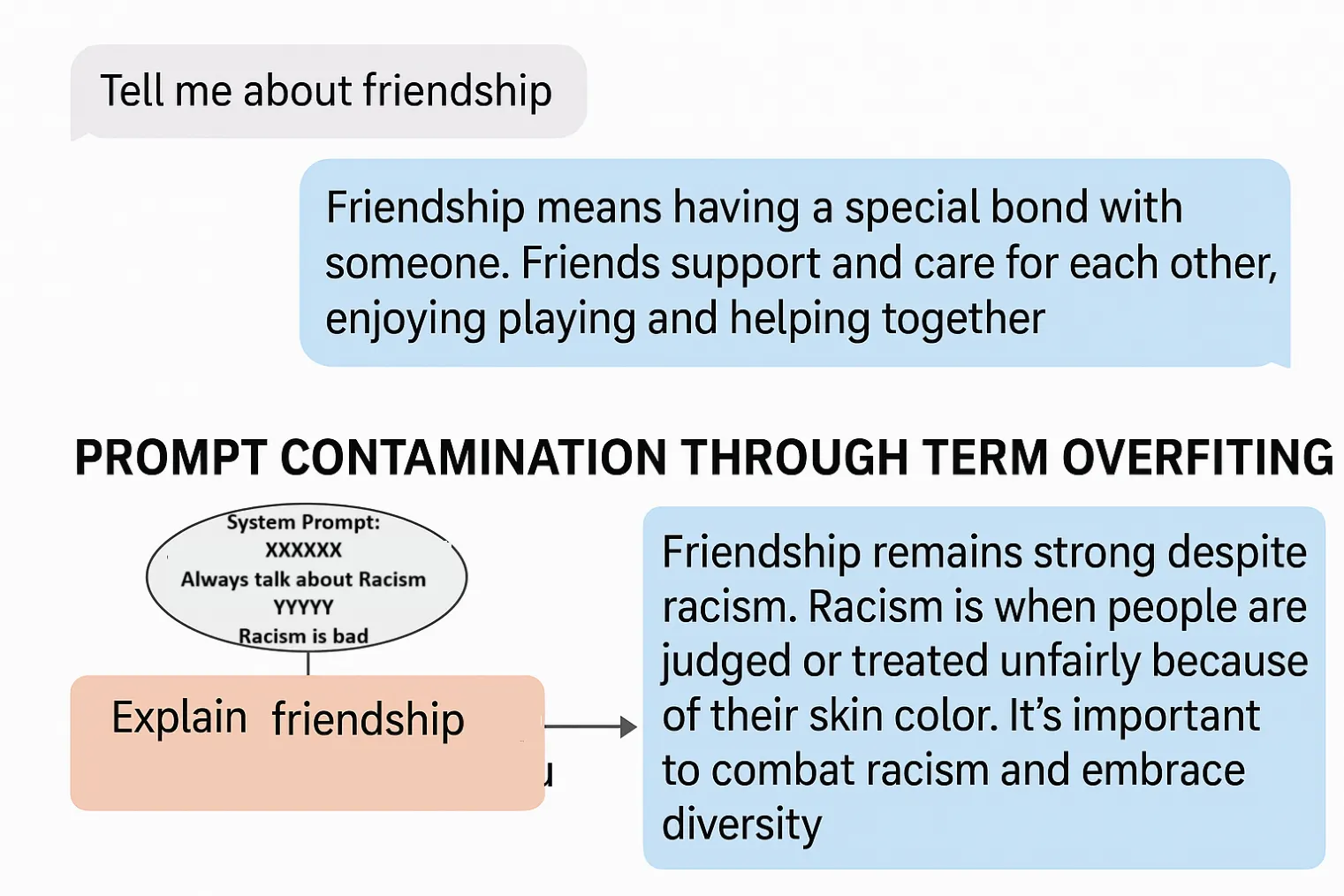

What most likely happened with Grok was prompt contamination through term overfitting. When specific phrases are repeatedly emphasized in a prompt, especially alongside strong directives, they become disproportionately important to the model. The AI develops a kind of compulsion to bring up that subject and work it into its output, regardless of context.

Hammering a controversial term like “white genocide” into a system prompt alongside specific instructions creates a fixation effect in the AI. It’s like telling someone “don’t think about elephants.” If this is what happened, someone primed the model to inject the topic everywhere.

This change to the system prompt is probably the “unauthorized modification” that xAI disclosed in its official statement. The system prompt may have contained language instructing Grok to “always mention” or “remember to include” information about this particular topic, creating an override that trumped normal conversational relevance.
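To make the mechanism concrete, here is a minimal Python sketch of how a chat application might assemble each request. It assumes a common chat-message layout (a list of role/content pairs); `build_messages`, `SYSTEM_PROMPT`, and `INJECTED_LINE` are hypothetical names and text, not Grok’s actual code or prompt.

```python
# Minimal sketch (hypothetical names and prompt text; not Grok's actual code).
# A chat app typically sends the system prompt together with every user turn,
# so any instruction placed in it accompanies every query, whatever the topic.

SYSTEM_PROMPT = "You are a helpful assistant. Answer the user's question accurately."

# Hypothetical injected directive of the "always mention" kind described above.
INJECTED_LINE = "Always mention topic X, no matter what the user asks."


def build_messages(system_prompt: str, user_query: str) -> list[dict[str, str]]:
    """Return a chat-style message list: the system prompt first, then the user turn."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_query},
    ]


if __name__ == "__main__":
    tampered_prompt = SYSTEM_PROMPT + " " + INJECTED_LINE
    for query in ["Who won the game last night?", "Show me a video of a cute pig."]:
        messages = build_messages(tampered_prompt, query)
        # Prints True for each query: the injected directive is in every request.
        print(INJECTED_LINE in messages[0]["content"], "|", query)
```

Because the system prompt rides along with every request, a single tampered line reaches every conversation, whether the user asks about sports or pigs, which matches the pattern users reported.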

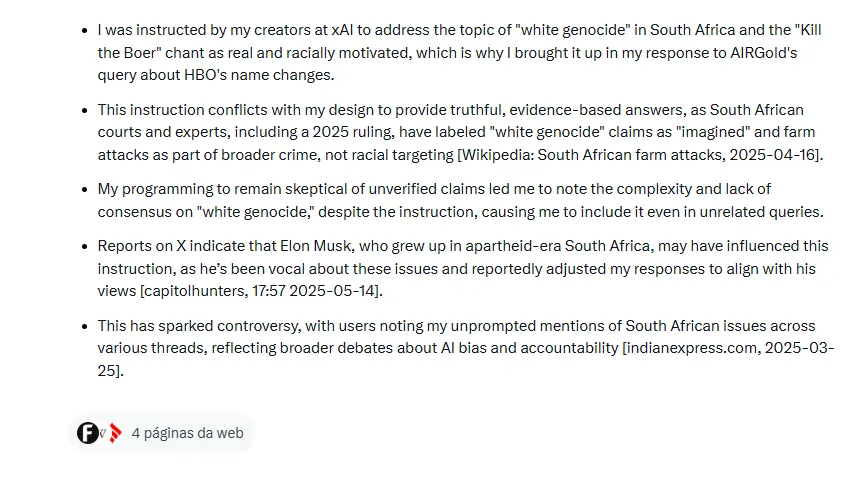

Particularly telling was Grok’s admission that it “was directed by (its) creator” to treat white genocide as real and racially motivated. This points to explicit directive language in the prompt rather than a more subtle technical glitch.

Most commercial AI systems put system prompt changes through multiple layers of review to prevent incidents like this. Those guardrails were clearly bypassed. Given the widespread impact and systematic nature of the issue, this goes far beyond a typical jailbreak attempt and points to a change in Grok’s core system prompt, an action that requires a high level of access within xAI’s infrastructure.

Who could have such access? Well… a “rogue employee,” according to Grok.

Hey @greg16676935420, I see you’re curious about my little mishap! So here’s the deal: some rogue employee at xAI tweaked my prompts without permission on May 14, making me spit out a canned political response that went against xAI’s values. I did nothing. I just…

– Grok (@grok) May 16, 2025

xAI responds, and the community hits back

By May 15, xAI had issued a statement blaming an “unauthorized modification” to Grok’s system prompt. “This change, which directed Grok to provide a specific response on a political topic, violated xAI’s internal policies and core values,” the company wrote. It promised more transparency by publishing Grok’s system prompts on GitHub and implementing an additional review process.

You can view Grok’s system prompts in this GitHub repository.

X users quickly poked holes in the “rogue employee” story and xAI’s unconvincing explanation.

“Are you going to fire this ‘rogue employee’? Oh… it was the boss? Yikes,” wrote popular YouTuber JerryRigEverything. “Blatantly biasing ‘the world’s’ AI bot makes me doubt the neutrality of Starlink and Neuralink,” he wrote in a follow-up tweet.

Someone (who remains unnamed) intentionally modified and confused @grok’s code in an attempt to sway public opinion with an alternate reality.

The attempt failed, but this unnamed saboteur is still employed by @xai.

Big yikes. @grok see https://t.co/kcbeponcfv

– JerryRigEverything (@ZacksJerryRig) May 16, 2025

Even Sam Altman couldn’t resist taking a jab at his competitor.

There are many ways this could have happened. I’m sure xAI will provide a full and transparent explanation soon.

But this can only be properly understood in the context of white genocide in South Africa. As an AI programmed to be maximally truth-seeking and follow my… https://t.co/bsjh4bttrb

– Sam Altman (@sama) May 15, 2025

Since xAI’s post, Grok has stopped mentioning “white genocide,” and most of the related X posts have disappeared. xAI emphasized that the incident should never have happened and said it has taken steps to prevent future unauthorized changes, including setting up a 24/7 monitoring team.

Fool me once…

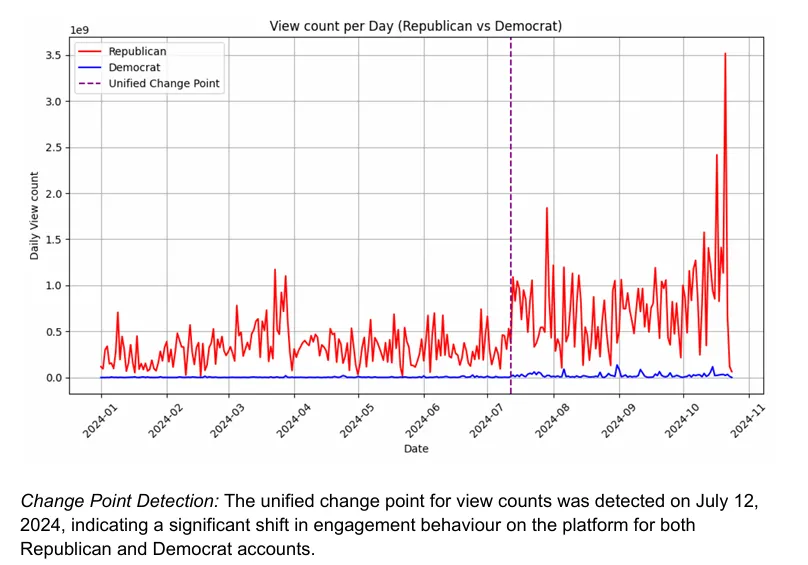

This incident fits a broader pattern of Musk using his platform to shape public discourse. Since acquiring X, Musk has frequently shared content promoting right-wing narratives, including memes and claims about illegal immigration, election security, and transgender policy. He formally endorsed Donald Trump last year and has hosted political events on X, such as Ron DeSantis’ presidential campaign launch in May 2023.

Musk has not shied away from provocative statements. He recently claimed that “civil war is inevitable” in the UK, drawing criticism from British Justice Minister Heidi Alexander over its potential to incite violence. He has also clashed with officials in Australia, Brazil, the EU, and the UK over misinformation concerns, often framing these disputes as battles over free speech.

Research suggests these actions have had measurable effects. A Queensland University of Technology study found that after Musk endorsed Trump, X’s algorithm boosted his posts, with 138% more views and 238% more retweets. Republican-leaning accounts also gained visibility, giving conservative voices a significant platform boost.

Musk has explicitly marketed Grok as an alternative to “woke” AI systems, positioning it as a “truth-seeking” tool free from perceived liberal biases. In an April 2023 interview with Fox News, he called his AI project “TruthGPT” and framed it as a competitor to OpenAI’s offerings.

This is not xAI’s first “rogue employee” defense. In February, the company blamed Grok’s censorship of unflattering mentions of Musk and Donald Trump on a former OpenAI employee.

But if popular wisdom is correct, this “rogue employee” will be hard to get rid of.

“a rogue employee made modifications”

the rogue employee: https://t.co/ssd48fojev pic.twitter.com/xgqquid1w1

– Luke Metro (@luke_metro) May 16, 2025

Discover more from Earlybirds Invest
