OpenAI announced GDPval on Thursday. It is a benchmark that attempts to measure whether AI can do real work.

These are not hypothetical exam questions but actual deliverables: legal briefs, engineering blueprints, nursing care plans, financial reports, the kinds of work that pay mortgages. The researchers deliberately focused on occupations where at least 60% of tasks are computer-based.

Its scope covers professional services roles such as software developers, lawyers, accountants, and project managers; finance and insurance positions such as analysts and customer service representatives; and information-sector work ranging from journalists and editors to producers and audio/video engineers. Healthcare administration, white-collar manufacturing roles, and sales and real estate managers also feature prominently.

Within that set, the tasks most exposed to AI overlap with the digital, knowledge-intensive activities that large language models already handle well.

- Software development, which represents the largest wage pool in the dataset, stands out as particularly vulnerable.

- Legal and accounting jobs, which rely heavily on documents and structured reasoning, also rank high on the list, as do financial analysts and customer service representatives.

- Content production roles (editors, journalists, and other media workers) face similar pressure, given AI's growing fluency in language and multimedia production.

The absence of manual and physical jobs from the study marks the benchmark's boundary. GDPval is not designed to measure exposure in areas such as construction, maintenance, and agriculture. Instead, it suggests that the first wave of disruption is likely to hit white-collar, office-based jobs.

The report draws on a study published two years ago by OpenAI and the University of Pennsylvania, which estimated that about 80% of US workers could have at least 10% of their tasks affected by LLMs, and about 19% could see at least 50% of their tasks affected. The jobs most at risk (or most likely to be transformed) are knowledge-heavy white-collar jobs, especially in law, writing, analysis, and customer interaction.

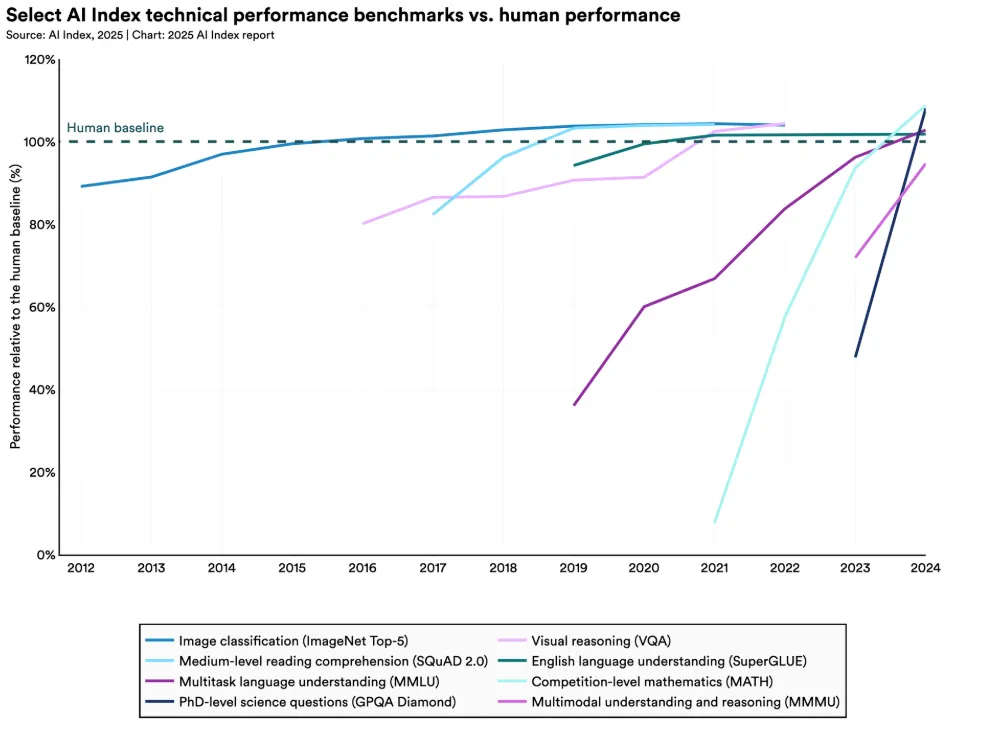

But the unsettling part is not today's numbers. It's the trajectory. At the current rate of improvement, the data suggest that AI could match human experts across the board by 2027. That is very close to an AGI standard, meaning that even tasks once considered too specialized to automate could quickly come within machines' reach, threatening rapid changes in the workplace.

OpenAI tested 1,320 tasks across 44 occupations. These are not random jobs but roles in the nine sectors that drive the majority of America's GDP: software developers, lawyers, nurses, financial analysts, journalists, engineers. In other words, the people who thought their degrees would protect them from automation.

Each task was created by professionals with an average of 14 years of experience: not interns or recent graduates, but veterans who know their craft. The tasks were not simple. They took experts an average of seven hours to complete, and some spanned several weeks.

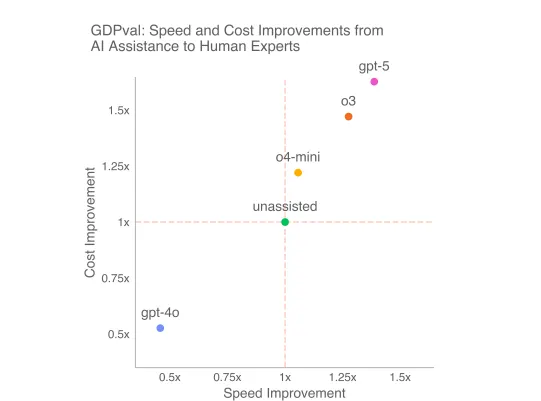

According to OpenAI, the models completed these tasks roughly 100 times faster than the human experts and at considerably lower cost, figures based on model inference time and API billing rates. For more specialized tasks, the gains were smaller but still notable.

Even after accounting for review time and the occasional retry when the AI hallucinates something strange, the economics tilt sharply toward automation.

But a caveat: just because your work is exposed doesn't mean it's gone. Many roles may be augmented rather than replaced, with lawyers and journalists using LLMs to write faster.

And however far AI has come, hallucinations are still a pain point for businesses. The study found that the most frequent failure was not following instructions: 35% of GPT-5's losses came from not fully grasping what was asked, and formatting errors accounted for another 40% of the failures.

The models also struggled with collaboration, client interaction, and anything requiring genuine accountability. No one is suing an AI for fraud yet. However, for solo digital artifacts (the reports, presentations, and analyses that fill most knowledge workers' days), the gap is closing rapidly.

OpenAI acknowledges that GDPval covers only a limited slice of the tasks people do at work today. The benchmark cannot measure the thousand micro-decisions, the interpersonal skills, or the physical presence that make someone valuable beyond their deliverables.

Still, when investment banks start comparing AI-generated competitor analyses with their human analysts' work, when hospitals evaluate AI nursing care plans against those of experienced nurses, and when law firms test briefs drafted by AI assistants against human-drafted ones, this is no longer speculation. It's measurement.

Discover more from Earlybirds Invest
